Has anybody else submitted this Depth Canvas bug?

Esemwy

Posts: 578

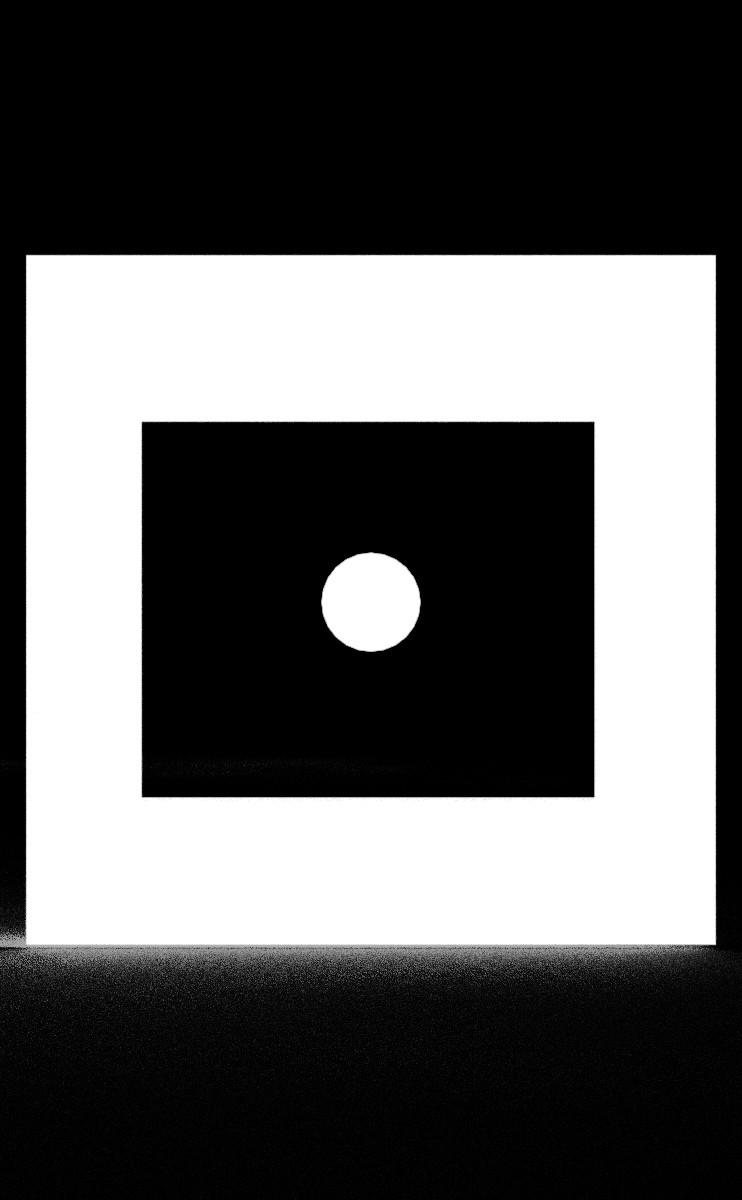

I've been trying, without much success, to submit a bug report through the Help Center. The problem is most easily illustrated with the following three images.

I rendered a simple scene with Iray Canvases enabled for Beauty, Alpha, and Depth. Depth fails to take transparency into account.

The below scene contains a red plane and a green sphere. The plane has an image provided in Cutout Opacity which makes the green sphere visible through the hole.

[Images: Beauty | Alpha | Depth]

I call this a bug or a serious mis-feature. I'm sure I've seen other mentions of it in the forums, but I don't know whether anybody else has submitted a report. If you've encountered this "feature," chime in. Especially if you've submitted a ticket, speak up. Also, if you're willing, supply your ticket number, so I can convince the Help Center that I'm not insane.

Comments

I wouldn't call that a bug. As far as the renderer is concerned the geometry is solid. The transparency map on the cutout opacity channel simply makes some of that object less opaque. So for depth calculations, it's still a solid object.

It should be depth as far as light rays are concerned, not just the geometry. The entire point of depth is so that you can do distance effects in post. Anyway, the help center finally agreed to pass it to the developers this afternoon. Hopefully they come back with some sort of statement one way or another.

Have you tried your own LPE to see if it's different than using the "built in" canvas? It's been a few months since I looked at the expression syntax, but maybe there's something there that better deals with transparencies.

Speaking of transparencies, there are 3 modes of it (plus custom LPE) in the Alpha sub-panel. Not sure whether this affects only the alpha background, or anything with alpha-ized materials. Might also be something to look into. Or might not!

Depth calculations are based on the same principles as the old scanline renderer techniques (that were used for sorting to determine visibility of objects or polys.) Surface characteristics are ignored, so transparency won't matter. If you raytraced a line through it, it STILL hits the surface. It simply isn't refracted and spawns a new ray along the same trajectory and the surface would contribute no color. It's still a surface.

While it would allow for some neat compositing effects, I'd still bet they'll call it 'working as intended'.

If it is working as intended, then it's useless. So no z-depth maps for trees that use alpha maps for the leaves?

On another note, using z-depth maps that allow for transparency does create an inherent issue. Consider the following scenario:

Let's say white represents close to you, black far away, and gray represents the middle ground in your depth map. Now, let's say you have a 50% transparent window close to you with a far away object behind it. This window will render 50% white and 50% black in your depth map. It'll render gray even though neither the window, nor the object behind it, are in the middle ground.

An unavoidable limitation, I believe.

- Greg
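To put numbers on the window scenario above, here is a minimal sketch. It assumes a renderer that blends depth by opacity the same way it blends color, which is only one possible behavior and not something confirmed for Iray. The function name and the distances are made up for illustration.

```python
def blended_depth(near_depth, far_depth, opacity):
    """Opacity-weighted average of two depths along one pixel's ray.

    near_depth: distance to the semi-transparent surface (hypothetical units)
    far_depth:  distance to the opaque surface behind it
    opacity:    0.0 (fully transparent) to 1.0 (fully opaque) for the near surface
    """
    return opacity * near_depth + (1.0 - opacity) * far_depth


# A 50% transparent window 1 unit away, with an object 9 units away behind it.
window = 1.0
backdrop = 9.0
print(blended_depth(window, backdrop, 0.5))  # 5.0
```

The result lands at 5 units, where there is no surface at all, which is the "gray that matches nothing" problem described above.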

Iray appears cognizant of the issue, which is why there are different alpha LPE modes. From the docs:

According to this, the default behavior should cause the depth map to see "through" geometries, and should respect the shader -- an object "transmits" solely from its material settings (e.g., a "transmitting" material). This should include setting Cutout Opacity to 0, or using a map in that node.

Greg, I've never seen an Iray z-map with anything but grays, and those deviated no more than maybe 30-35%. I tested explicitly with an object right in front of the camera, to infinity. There was no tone mapping I could do that would create a very obvious depth map. I did use Photoshop, and CS5 at that, and maybe it's not very good for tone mapping 32-bit HDRs.
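For what it's worth, one way around a weak tone mapper is to rescale the raw float values yourself before viewing them. The following is only a sketch: it assumes the depth canvas has already been loaded into a flat list of floats, and that rays hitting nothing come back as infinity (how Iray actually encodes the background is not something this thread establishes). `normalize_depth`, `near`, and `far` are hypothetical names.

```python
import math


def normalize_depth(values, near=None, far=None):
    """Map raw depth floats to 0..1 gray, near = white (1.0), far = black (0.0).

    Non-finite values (assumed here to mean "no hit") are mapped to black.
    If near/far are not given, the finite min/max of the data are used.
    """
    finite = [v for v in values if math.isfinite(v)]
    if not finite:
        return [0.0 for _ in values]
    lo = min(finite) if near is None else near
    hi = max(finite) if far is None else far
    span = hi - lo
    out = []
    for v in values:
        if not math.isfinite(v) or span <= 0:
            out.append(0.0)  # background or degenerate range
        else:
            t = (v - lo) / span
            t = min(1.0, max(0.0, t))  # clamp values outside near..far
            out.append(1.0 - t)
    return out


print(normalize_depth([1.0, 3.0, 5.0, float("inf")]))  # [1.0, 0.5, 0.0, 0.0]
```

Passing explicit `near` and `far` values stretches just the range of interest across the full black-to-white scale, which is what a flat-looking map usually needs.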

Thanks for posting this info, Tobor. I render with 3DL for my NPR work anyway, but I wanted to chime in because this concept is crucial to my work, and I am interested in Iray canvases. I could have sworn there was a post about tone mapping a depth map a while back. Will try to dig it up when on my computer rather than this iPad.

- Greg

I think you need to re-evaluate just what a 'depth' buffer render is. It is traditionally used to determine what order (from camera perspective) objects appear in...i.e., how far from the camera the objects are. In old scanline renderers, this let them draw from back to front, and just overwrite further objects, thus generating correct object visibility.

A transparent object is STILL a solid object. If you use the above example images, the red plane is STILL in front of the green ball. Even if part of it is transparent. This is the difference in using a plane, and using a mesh that actually has the center section cut out. As far as the depth buffer is concerned, transparency doesn't exist. ALL objects are treated as opaque.

What would it do if your concept was correct and the central part of the red plane was partially transparent? Translucent? Depth is a question of distance from the camera along the view direction. The plane is still a solid object, regardless. Raycasting techniques use the depth buffer to determine WHAT needs to be tested against first. Your way would have it test against the ball BEFORE it tested against the (potentially partially) transparent plane. This would render the ball 'in front of' the plane, since the green ball isn't transparent, and no further rays would be spawned. This is what a depth buffer is used for. Not sure what you are hoping to use it for.....

Tobor, LPE is about light paths, not depth. See http://blog.irayrender.com/post/76948894710/compositing-with-light-path-expressions

@hphoenix, if what you say is true, then depth is entirely useless in post processing. Almost all scenes have transmaps (hair). Iray/Studio should be producing a depth map identical to what it uses in depth of field and volume effects. It's obvious that Iray has this data internally, because those things work. Whether it's called "depth" or something else is semantics.

@tobor, it might be possible to produce what I want using an LPE. If the help center doesn't respond with something useful, I'll probably embark on that journey. Stay tuned.

What do you want, then? If you have an object 5 meters away that is hit by a ray, the depth value will be 5 meters. If the object is 50% transparent, it is still 5 meters away. What should the depth value be... 5 meters + half the distance to the object behind it, or something?

The depth value is the distance to the geometry. No matter how transparent it is, it's the same distance. That's how it works and has worked since the stone age.

The problem is that transparency maps are used for things they should not be used for. Transparency adds transparency to geometry, but the geometry is still there. Most other software has a clipping feature (often called clip maps) for clipping away parts of geometry, which would generate correct depth values, but I am not sure if such a thing is available in DS/Iray (I don't use it for rendering).

But hair is often transparent to some degree, and in that case a clip map will not work. Once again, what kind of depth value should you get from a 50% transparent pixel?
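The two positions in this thread can be sketched side by side. This is an illustration only, not how Iray or any particular renderer is implemented: each ray is represented as a list of hypothetical `(depth, opacity)` hits, and the clip-map idea is modeled as a simple opacity threshold.

```python
def first_hit_depth(hits):
    """Depth of the nearest surface, ignoring opacity entirely."""
    return min((d for d, _ in hits), default=float("inf"))


def cutout_aware_depth(hits, threshold=0.5):
    """Depth of the nearest surface whose opacity is at least `threshold`.

    Surfaces below the threshold are treated as clipped away, like a clip map.
    Returns infinity when nothing qualifies.
    """
    return min((d for d, a in hits if a >= threshold), default=float("inf"))


# One ray through the hole in the plane: the plane (opacity 0) at 2 units,
# then the opaque sphere at 6 units. Distances are made up.
hits = [(2.0, 0.0), (6.0, 1.0)]
print(first_hit_depth(hits))     # 2.0
print(cutout_aware_depth(hits))  # 6.0
```

The threshold gives a clean answer for fully cut-out pixels, but a 50% transparent hair strand still has to be forced to one side or the other, which is the open question raised above.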

Tobor - it was actually Esemwy that had posted on the subject before:

http://www.daz3d.com/forums/discussion/comment/859750/#Comment_859750

BTW, just wanted to say thanks again to both of you for taking the time to look into LPE as it relates to DS, and especially for generously sharing and documenting your findings!

- Greg

It's important to step back and consider what these canvases are intended for: compositing. Even if depth maps have been used in the past strictly for defining the z distance of the visible objects in the scene, that use carried into the realm of modern compositing is virtually useless. An object in a mirror has a "depth" of camera-to-mirror + mirror-to-object. Compositing processes need to know this. This is a physical reality that cannot be ignored. It is reasonable to expect modern PBRs will take this into consideration, regardless of the traditional use of z-buffers.

Iray supports two "depth" canvases, and Depth may be the wrong one to use for this, though both are described as distance to a hit point, and Distance specifically discusses ray paths. From the documentation:

depth

A Float32 canvas that contains the depth of the hit point along the (negative) z-coordinate in camera space. The depth is zero at the camera position and extends positive into the scene.

distance

A Float32 canvas that contains the distance between the camera and the hit point measured in camera space. The depth is zero at the camera position and extends positive into the scene. The distance is related to depth by a factor that is the inner product of the (normalized) ray direction and the negative z-axis (0,0,-1).
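Reading the two definitions together, depth appears to be distance multiplied by the cosine of the angle between the ray and the camera's viewing axis. The sketch below is one interpretation of the quoted text, not code taken from the Iray documentation; `depth_from_distance` is a hypothetical helper.

```python
import math


def depth_from_distance(distance, ray_dir):
    """Convert camera-to-hit distance into depth along the camera's -z axis.

    ray_dir is the ray direction in camera space (need not be normalized).
    The factor is dot(normalized ray_dir, (0, 0, -1)), which reduces to -z / length.
    """
    x, y, z = ray_dir
    length = math.sqrt(x * x + y * y + z * z)
    cos_theta = -z / length
    return distance * cos_theta


# A ray straight down the view axis: depth equals distance.
print(depth_from_distance(5.0, (0.0, 0.0, -1.0)))
# An off-axis ray: depth is shorter than distance.
print(depth_from_distance(5.0, (3.0, 0.0, -4.0)))
```

So the two canvases agree at the center of the frame and diverge toward the edges, which matters if a post effect expects one and is fed the other.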

@Esemwy, It is still useful in post-processing, as well as for internal processing during renders, just not in the way you are wanting to use it. Since every pixel will have a certain 'depth' (usually 16-bit greyscale), if you want to insert something into the scene (i.e., compositing) you simply have to know the 'depth' at which you want to insert it. Now, agreed, you have to handle areas where there is transparency (full or partial) to match, since a depth map won't take those into account.
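As a rough sketch of the insertion idea described above: compare depths per pixel and keep whichever element is closer to the camera. Everything here is hypothetical (flat per-pixel lists, made-up values), and it deliberately ignores transparency, which is the part that would need separate handling.

```python
def composite_by_depth(scene_rgb, scene_depth, insert_rgb, insert_depth):
    """Per pixel, keep the inserted element only where it is closer than the scene.

    All four arguments are flat, equal-length per-pixel lists. Use float("inf")
    in insert_depth for pixels the inserted element does not cover.
    """
    out = []
    for s_rgb, s_d, i_rgb, i_d in zip(scene_rgb, scene_depth, insert_rgb, insert_depth):
        out.append(i_rgb if i_d < s_d else s_rgb)
    return out


# Two pixels: the scene is at depth 2 and 6, the inserted element sits at depth 4.
scene_rgb = [(255, 0, 0), (0, 255, 0)]
scene_depth = [2.0, 6.0]
insert_rgb = [(0, 0, 255), (0, 0, 255)]
insert_depth = [4.0, 4.0]
print(composite_by_depth(scene_rgb, scene_depth, insert_rgb, insert_depth))
# [(255, 0, 0), (0, 0, 255)]
```

With a first-hit depth map, a pixel inside a cutout hole still reports the plane's depth, so an element inserted between the plane and the sphere would be hidden there even though the beauty pass shows the sphere.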

Depth maps were never meant for serious post-processing work.....just basics. Depth Maps were originally used internally for sorting objects/polys and allowing the render engine to cache the data efficiently. Much like Shadow Buffers, Light Buffers, and other 'image' based speed-ups used internally during rendering. They can be exposed for use, but they aren't really made for doing post-work......they can sometimes be adapted for it, but that isn't what they were designed for.

Nvidia has gone out of their way to discuss the use of canvases, including depth, as part of a robust compositing process. In fact, they have been selling the renderer on this basis. See for example:

http://www.nvidia-arc.com/products/iray/rendering.html

Scroll down to 'NEW: Compositing Elements and Light Path Expressions' This is just one example in sales literature and blogs. There are many others.

This quoting system is for the birds - I think hphoenix authored the post you quoted. I've already said that I think the depth info is useless without opacity map info. Whether it's dumping a buffer, or rendering a pass specifically for the purpose of generating a depth map is irrelevant.

- Greg

Greg, Right you are! I tried to fix it when I first wrote the post, and messed up. I'll see if I can edit it, so my change will reflect proper attribution.

Thanks. Figured as much, as I've wrangled with it (and lost), too.

- Greg