Licensing Agreement | Terms of Service | Privacy Policy | EULA

© 2024 Daz Productions Inc. All Rights Reserved.

Comments

Ditto

This isn't really supposed to be an AI thread, so I will be brief.

AI is doing, and getting better at, what we humans already do.

I'd argue that we humans train on images and experiences that we have seen, and then assemble it into the art that we make. We study actual objects like fruit and wooden bowls, or directly look at fruit in a bowl, and copy/interpret what we see.

AI "art" is moving past just cutting and pasting what it knows/has seen.... It is getting to the point where, after digesting 50 million pictures of a rhinoceros, it can make a rhino in any degree of realism or art style, from any angle... etc etc... But the USER of the AI is still really the artist... they feed the text prompts in, tweaking until the results convey what the text prompter (artist) needs/wants.

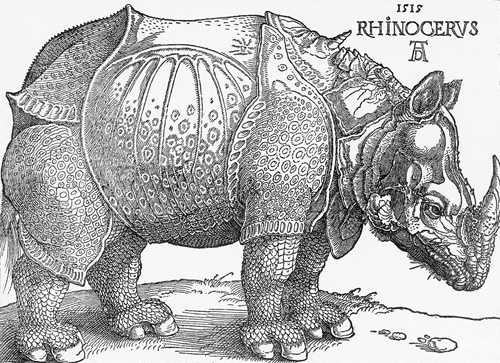

For context, I'll post a picture of Albrecht Dürer's Rhinoceros.

For those that don't know, Dürer created his famous woodcut of a rhinoceros in 1515 based on a written description, as no living rhinoceros had been seen in Europe since Roman times. So, he used TEXT PROMPTS to make his art.... interesting.

AI is rapidly moving past the point of spitting out/cut and paste as you are describing it. It won't need to see 'more' images to get better; it gets better as the software and hardware get better. So as the software improves and the hardware improves, the AI will need less and less to get better results.

So, to bring this back on topic... My question is, what is going to come first, advancements in AI or Daz 5? If Daz 5 takes another 1.5 years to hit the door, methinks (that is I think), Daz just may be obsolete.

Because, honestly, Daz is no different than AI in the way you are describing it. AI machine learning is comparable to Daz & assets. Daz 3D 4.15/Daz 5 only "knows" what you've bought from its store. You take the items you buy, assemble them in the viewscreen, and take a picture of it/render it. You can't render images of what you don't own (or what the machine hasn't learned). And while you can make things in a pinch with the Daz software, it really isn't a creation software like Blender, ZBrush, Maya etc.

The ability of AI is growing very rapidly... enough so that you can make a commercial in about 3 hours.

At the end of the day, my allegiance isn't to any particular software. I just wanna make art. If I can do it without spending $1000s on assets and hundreds of hours trying to pose a hand, or getting Key frames squared away, all the better.

Remember, AI image generators (DALL-E et al) have only really been available to the public since 2021-ish. That is basically 2 years. Two years ago, what version of Daz were we using? 4.1xxx?

We MIGHT have Daz 5 in 2 more years... But where will AI be then?

Sorry if I shift the focus away from the AI topic (which I find extremely interesting), but what a dream it would be if Daz 5 came with SoftBody Collisions! It almost certainly will not (not much emphasis on "almost"), but a guy can dream...

Yes maybe AI supported? <duck and cover>

Rhino like here?

> https://scontent-dus1-1.xx.fbcdn.net/v/t1.6435-9/188162556_920776668763373_1616585628964472988_n.jpg?_nc_cat=110&ccb=1-7&_nc_sid=973b4a&_nc_ohc=XbgXzRhUyQoAX8V6tV4&_nc_ht=scontent-dus1-1.xx&oh=00_AfA27L2s3qIuxw1Ce9Blk3-T6Z_B1nFyyxtxhfx90MWk9A&oe=649F2C7B

On the subject of AI art, this blog post has an interesting take on it. (Fair warning, some of the other blog posts there contain NSFW images, but not that post specifically.)

https://arianeb.com/2023/06/01/ais-dont-know-how-to-be-awesome-and-will-always-suck/

One of the points the blogger made is that interest in AI art might continue for a good part of the 2020s then fade out from the masses by next decade or so, but the tool itself will still be used for some while. But the blogger also points out that AI is mostly going to create bland-ish art, because it's based purely on processing lots of other material by humans that it sees... quite a lot of which is also bland. Also, it's mainly just a very sophisticated mimic, and can't really cultivate a sense of humor... which is to say, it can't be spontaneous and intentionally funny.

https://www.daz3d.com/daz-bridges

"Nvidia Omniverse, Coming Soon to DAZ 5"

...so what does that mean for Iray?

Iray is still part of Omniverse, it's the path tracing renderer. I'm a little disappointed in going the Omniverse route instead of proper USD support (including Hydra) but I understand it's the "lowest hanging fruit" for Daz and nvidia is probably providing a lot of direct assistance. I just don't like that nvidia is basically going off on their own while the rest of the industry is collaborating on making USD work in a more open fashion.

Awesome, now I definitely need an RTX 4090

That's interesting and all, but I'm more interested in "DAZ 5 coming soon to customers".

I mean, technically, "Filament for Mac Users now available in DAZ 5" was true in August, 2021.

-- Walt Sterdan

Clarification: Typically it does not calculate :). It's banging something onto something else, and if you're lucky, it works.

The last thing I expect to be better is light ray calculation, unless you mean faster, e.g. because faster and good enough at the same time is better. Rays can be calculated, clearly. But creating animations from random videos may be a typical machine learning case, where you don't want to employ "classic" algorithms only. Expecting light ray calculation to be precise (from a generative system) would be like expecting correct in-depth reasoning from a large language model (-based system) like ChatGPT ;.).

Of course there could be a thousand shortcuts, also with specialized tools, like for reflections on water for a character, but this certainly will be along the lines of "good enough", rather.

Iray's method of sending out multiple rays and hoping does seem to me to be a brute ignorance & force solution to the problem. It seems to be along the lines of solutions I'd come up with. I feel that there should be a better, more considered, efficient approach. Also, as the convergence increases you don't see a speed increase, which I'd expect if it were only applying the processing power to the currently unconverged pixels. Instead, it appears to keep considering all pixels in the image, which does surprise me.

What do I know, I'm only a mechanical engineer who also writes software tools for himself to use, not a software engineer.

Regards,

Richard

Iray is a light simulator, in effect - shortcuts would be more the area of a biased renderer (as far as I know that is what makes them biased - that they don't do everything but have rules). Though Iray does have some guidance (for example, on concentrating on openings like windows and doors for enclosed spaces with external light sources), I believe.

I'm not sure that it could stop processing pixels entirely, given the way the algorithm works, but it certainly doesn't - you can leave a render chugging away and it may well improve.

It's not so brute force. Like most modern path tracers, it employs a number of techniques (Next Event Estimation, or NEE, being a common one) to speed up convergence. But in order to properly model things like microfacet behavior (surfaces that are modelled to be "rough" or prone to scattered reflections), you need some randomness to get an accurate look. And when dealing with randomness, there is generally only "good enough", never "perfect". Also, since Iray is predominantly a GPU renderer, there are limitations to the sort of methods it can use, at least until GPUs evolve. This is why some CPU-based renderers (like Corona) produce really clean and accurate images (at the expense of slow performance), because GPU memory access patterns are very limiting (even if fast).
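To make the "good enough, never perfect" point concrete, here is a minimal Python sketch of random sampling noise. It is not Iray and not a path tracer: `estimate_pixel` and `noise_at` are made-up names, and each "ray" is just a uniform random number whose true average is 0.5. The only thing it is meant to show is that averaging random samples gets closer to the right answer as the count grows, but never lands on it exactly.

```python
import random
import statistics

def estimate_pixel(num_samples, rng):
    """Average num_samples random contributions for one toy pixel.

    Each sample stands in for one light path and is uniform in [0, 1),
    so the exact answer the estimate is chasing is 0.5.
    """
    return sum(rng.random() for _ in range(num_samples)) / num_samples

def noise_at(num_samples, trials=200, seed=1):
    """Spread (standard deviation) of the estimate over repeated toy renders."""
    rng = random.Random(seed)
    estimates = [estimate_pixel(num_samples, rng) for _ in range(trials)]
    return statistics.pstdev(estimates)

if __name__ == "__main__":
    # Each step uses 4x the samples of the previous one.
    for n in (16, 64, 256, 1024):
        print(n, "samples -> noise", round(noise_at(n), 4))
```

In this toy setup the noise falls roughly with the square root of the sample count, so each 4x increase in samples only about halves the remaining noise.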

That's normal; you would generally expect "diminishing returns" when it comes to iterations vs convergence. It is however possible to take convergence data and start distributing samples where they are needed most; this would be Adaptive Sampling, which Iray does not have. They do however support Path Guiding (aka Guided Sampling per their terminology) which can improve convergence in some complex reflective/refractive scenarios like caustics. This has only become practical on a GPU in the last few years.
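For anyone wondering what "distributing samples where they are needed most" looks like, here is a rough Python sketch of the adaptive sampling idea. It is purely illustrative and has nothing to do with how any real renderer implements it: `render_adaptive`, `pixel_noise` and `target_error` are hypothetical names, and each pixel is just a Gaussian random source with a given amount of noise.

```python
import math
import random

def render_adaptive(pixel_noise, target_error=0.02, batch=16,
                    max_batches=200, seed=7):
    """Toy adaptive sampler: keep sampling only the pixels that are still noisy.

    pixel_noise: per-pixel standard deviation of a single sample (a
    hypothetical stand-in for how "hard" each pixel is to converge).
    Returns the number of samples spent on each pixel.
    """
    rng = random.Random(seed)
    n = len(pixel_noise)
    count = [0] * n
    total = [0.0] * n
    total_sq = [0.0] * n
    active = set(range(n))  # pixels that have not converged yet

    for _batch_index in range(max_batches):
        if not active:
            break
        for i in list(active):
            for _sample_index in range(batch):
                s = rng.gauss(0.5, pixel_noise[i])
                total[i] += s
                total_sq[i] += s * s
            count[i] += batch
            mean = total[i] / count[i]
            # Simple (biased) variance estimate from the running sums.
            var = max(total_sq[i] / count[i] - mean * mean, 0.0)
            std_error = math.sqrt(var / count[i])
            if std_error < target_error:
                active.discard(i)  # converged: stop spending samples here
    return count

if __name__ == "__main__":
    # One easy pixel and one hard pixel.
    print(render_adaptive([0.01, 0.5]))
```

The easy pixel drops out after its first batch, while the noisy one keeps receiving samples until its estimated error falls under the target, which is the behaviour Richard was expecting to see.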

...I wish there was a Daz interface for the newest RenderMan.

This argument has been debunked multiple times. Humans can be creative in how they draw, sculpt, etc. in ways that AI simply cannot. We do not duplicate the object perfectly every time, or at all in a lot of cases. We do not draw upon only what we have seen, but what we have heard, touched, tasted, and of course...our emotions. No matter how complex or amazing AI generators may get, they do not do any of this. They are merely filtering out pixels in a way that results in an image, it is pure math. That is what the "diffusion" part of Stable Diffusion is all about. The training data is turned into noise. Any prompt takes noise and fills in the pixels using its data set. The noise pattern is called a "seed". This is an important detail. Because it is math, the results can be perfectly duplicated every time.

In the example provided, Dürer had to use his own creativity to decide where to put each feature of the creature. The AI generator does not do this, it uses math to determine what each pixel should be from its data set compared to the noise pattern. That is a huge difference. Keep in mind that Dürer had no data besides a text description. If an AI did not have 50 million pics of rhinos in its dataset, the result would be a horror show, and I seriously emphasize how horrific it would be. Try asking AI to draw something it never was trained on, it simply doesn't work. The result is completely alien and freaky. However a human drawing something they never have seen might still draw something comprehensible, like Dürer.

If you use the same prompt with the same seed and settings on another computer you will get the exact same result, again because it is only math. You cannot get the exact same result from human art so easily. If you go to another human, and give them the same prompt and the same brush and tools, this different human will not create the same image that the first human did. Again, Dürer is a perfect example. What if they asked somebody besides Dürer to draw a rhino? It would look very different, even if they had the exact same pens and paper, even if they were in the same room together, even if they studied together. Because that human is using their own personal data sets. You can have wildly different computers. One computer might be in a shop and dirty. They can have different parts, with different drives and be used by wildly different people. But if these computers install Stable Diffusion with the same settings and prompts, they will get the same results. That is because diffusion is just a complex math equation, and nothing more. People have creativity. Even those who lack creativity, are still more creative than the AI. This is the difference. The prompt is the only aspect a human can be creative with, but even here, the prompt is largely just a formula for the AI generator to compute.
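The seed point can be illustrated with a tiny Python sketch. To be clear, this is not Stable Diffusion or any real image generator: `make_noise` and `toy_generate` are invented for illustration, and the "image" is just a short list of numbers. It only shows that a process built from seeded pseudo-random noise plus fixed arithmetic gives the same output for the same prompt and seed; it says nothing about how real generators behave across different hardware.

```python
import random

def make_noise(seed, size=8):
    """Return a reproducible list of pseudo-random "noise" values for a seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

def toy_generate(prompt, seed, steps=10):
    """Hypothetical stand-in for a generator: a fixed, purely arithmetic
    function of (prompt, seed). It is NOT a diffusion model."""
    values = make_noise(seed)
    # Turn the prompt text into a fixed number between 0 and 1.
    target = (sum(ord(ch) for ch in prompt) % 97) / 97.0
    for _ in range(steps):
        # Each step moves the noise halfway toward the prompt-derived target.
        values = [v + 0.5 * (target - v) for v in values]
    return [round(v, 6) for v in values]

if __name__ == "__main__":
    first = toy_generate("rhinoceros woodcut", seed=1515)
    again = toy_generate("rhinoceros woodcut", seed=1515)
    other = toy_generate("rhinoceros woodcut", seed=1516)
    print(first == again)  # same prompt and seed
    print(first == other)  # same prompt, different seed
```

Same prompt and seed reproduce the list exactly, while changing only the seed changes the result.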

The US Copyright Office has already clarified their ruling, you can copyright a prompt, but not the image created by an AI generator. To be able to copyright the image, you need to do more as a human to make that image your own. This seems logical to me. That doesn't touch on the potential issues with the training data itself, which becomes a different topic.

That doesn't mean AI has no place. Some people might think I hate AI art and am totally against it, when actually I am not...it just needs proper rules and regulations first, with ethical training data. There are plenty of people using a modeling program to create a base image that they insert into Stable Diffusion to produce a result. This part of AI is far more interesting to me, and has more human input (which might be enough to satisfy copyright). But you do not need Daz Studio for this. You can use any modeler, anything that has a human-like character. It doesn't even have to be realistic, some people use stick figures! You can use a model that is monotone in color, and actually it probably works best this way. But that means any model in any modeler will do. Even in Daz, you don't need a fancy skin or any morphs, just base Genesis, any Genesis, in a monotone color.

If you put the effort in, you can train a branch of Stable Diffusion on a specific character, and get consistent results across different poses and perspectives.

In this respect, an AI in Daz could be interesting. It would have to be a post process like the current denoiser. Perhaps they could have a new denoiser-like feature that is capable of adding new details to the image while it renders, with a slider controlling how strong it is. They could have a face enhancer, kind of like Topaz AI, which adds details to the face not present in the 3D model and makes it look more real. Or it could alter the background to look more like a cyber scape or whatever you ask of it. But you can do these things outside of Daz Studio with inpainting, so I don't know how useful they really would be, other than as a marketing bullet point. I suppose if they are easy to use, that could help, since doing local installs of Stable Diffusion can be confusing and resource heavy. But even here, IMO it would not be logical, as the AI would be effectively competing against Daz store products. Why would you need to buy a cyber scape environment if you could just ask for one from the AI during a render? It would not be compatible with the Daz business model.

I do think AI generators could be bad for Daz Studio as it could pull away some users, but Daz will always have its dedicated users for one reason or another.

I am aware of the beta test for the Tafi text to 3D character thing. It is a totally different beast from image generation. It is essentially a text prompt controlled Daz Studio from the description.

Maybe I should start my reply by saying, I never made a claim that AI is the most creative tool, or is better, equal, less than, a Human artist....

But I was comparing AI to Daz... and I think that there are similarities. And your last paragraph or so (quoted above) speaks to the point I was trying to make vis-à-vis Daz versus AI.

At the end of the day, throw Aiko into a scene and dress her up... how much different will she look than anyone else's Aiko? Yes, you can go to your morph dials (previously purchased) and make her nose fatter.... or with AI, use a big fat nose prompt.

Daz may always have its core users... but as AI and its controls get better (and for the sake of argument, AI art for the masses is really only 2-3 years old now), that dedicated fan base may find it harder and harder to justify that $129 Surfin Safari Bundle when all you'll have to do is put in the prompt words "... Realistic surfer on a red surfboard riding a 60' wave".

Time will tell though.... I just hope Daz 5 gets here fast.

DAZ Studio is allowing me to create images, which is certainly dumbing down the pool of artistry. I would hope DS5 could help me make better images so I can make up for previous dumbing-down, but I don't really think it'll happen. More's the pity. Will AI help me? I wish.. but I doubt that too.

Regards,

Richard

What AI Art does is force DAZ 3D to figure out a way to integrate iRay & DAZ Studio into AI embellishing the results to be better and faster than what their customers get now. AI art might not cause DAZ to lose the majority of its current customer base but it will divert the majority of potentially new customers from ever trying DAZ 3D. AI & AI art are very regularly in the papers and news shows, something that is not the case with DAZ 3D.

I know this is speculation (as is most of this thread) but from what I have read, DAZ is looking at ways to start a sort-of subscription scheme by making the entire store content available for AI creations. Of course that content would need to be paid for but, I assume, on a per-use basis. So, in effect, we would have to pay each time we use content but never actually buy the content. Alternatively, there might be the option to only use the content in your own library for AI creations.

I do hope the second option is included, otherwise I will be one of those giving up on this hobby. On the other hand, I'm very interested to see what comes of the adoption of Omniverse although I would have preferred just USD without the NVidia lock-in.

I just thought of the ultimate nightmare alternative: DAZ5 might be online only - running on cloud-based servers with only a web interface. Oh dear, why did I have to imagine that?

That would definitely put a stop to me using it

This is not going to happen, for two reasons.

Firstly, it would cost a huge amount of time, effort and money to port DAZ Studio to a web only interface.

Secondly, it is one of the fundamental principles of business that it is much easier and cheaper to keep your existing customers than it is to find new ones. DAZ is not going to risk alienating its huge existing customer base by doing anything like this.

Cheers,

Alex.

Think I'd prefer a few 'probably's in those assertions, Alex.

There are one or two companies that have made apparently irrational decisions and made a serious dent in their business (Think of Twidder's recent issues due to their new owner, and Gerald Ratner killing his jewellery business 'Ratners' with one unguarded statement https://www.businessblogshub.com/2012/09/the-man-who-destroyed-his-multi-million-dollar-company-in-10-seconds/ ). I'd HOPE DAZ won't behave like that, and it's highly unlikely, but unless you're the one making the decisions, it's impossible to rule them out completely.

Regards,

Richard.

There is no sign whatsoever that Daz is even considering moving to an "online only" system - why would they be updating DIM, and adding in features from Daz Central, if that was on the cards? How would it even work? I appreciate that nature abhors a vacuum and in the absence of actual information it is tempting to speculate wildly, but this feral speculation is pretty much untenable as far as I can see.

Don't know, I didn't expect Maxon C4D to go online subscription when it did

You mean some kind of activation system, for a local install? I would also regard that as highly improbable - the application is currently free, it's not like the switch from a paid-for permanent license to a rental scheme as perpetrated by Adobe, Maxon, and co. (Though I thought Maxon did still offer permanent licenses for at least some applications.)

Sorta, it's a perpetual subscription for $3500.00 for C4D

I see Dartanbeck published a new guide to animation for DAZ Studio. Does this mean you guys are finally going to fix the animation tools and all related stuff?

I've been stuck on DAZ Studio 4.12.0.86 for ages due to the awful bugs in the animation tools in the latest releases.